Applying neural networks to robotic vision and guidance

Human beings are excellent at seeing and handling objects. Even small children can pick a blue piece of Lego of a certain size and add it to their creation.

But robots don’t recognize objects the same way we do

Humans see stereoscopically—inputs from our eyes travel to the brain. Those inputs are made into a scene with depth, color, and texture. This is how the child picks up the Lego. Neurons in the brain do the work.

If we can give robots new abilities, they can take on new jobs, or work faster and more repeatably.

Robots guided by 3D vision systems can recognize objects and are generally repeatable. But these workcells are typically slower than humans, struggle with randomness and don’t always take the optimal path. Customized programming and specialized lighting complicate their deployment.

If the existing way of giving robots better sight has reached its limits, what’s the new alternative?

Teach a neural network to see stereoscopically

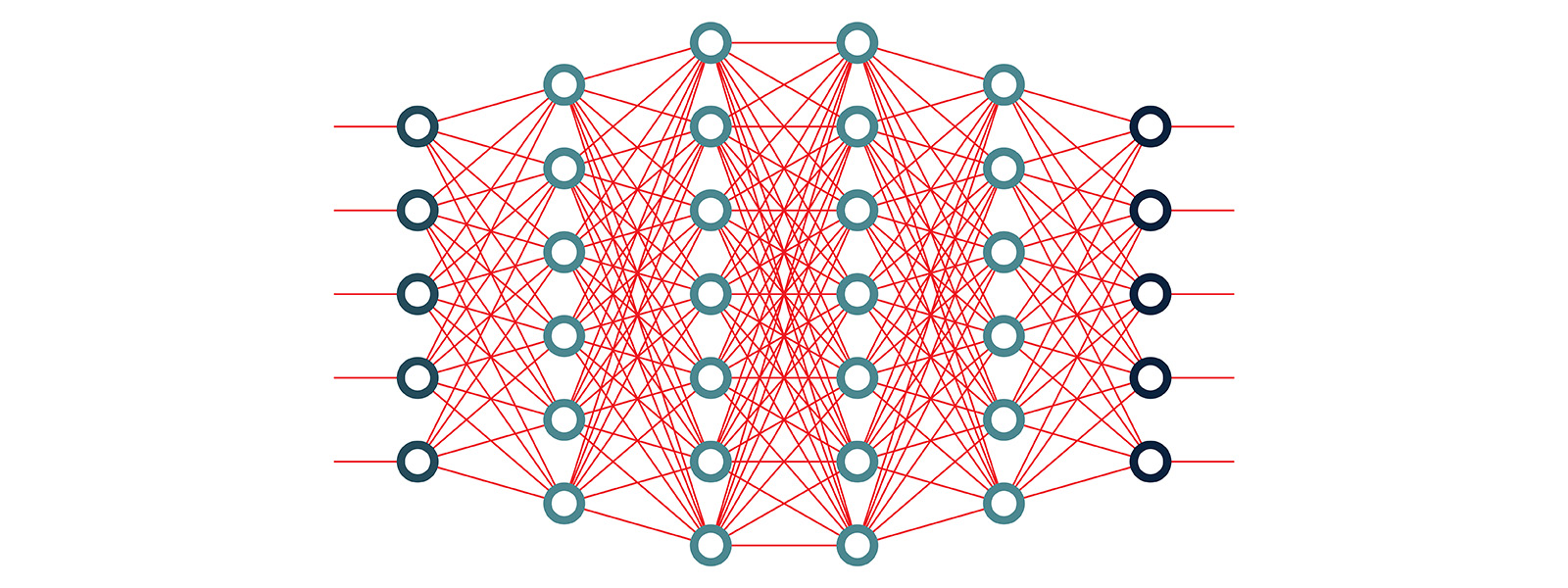

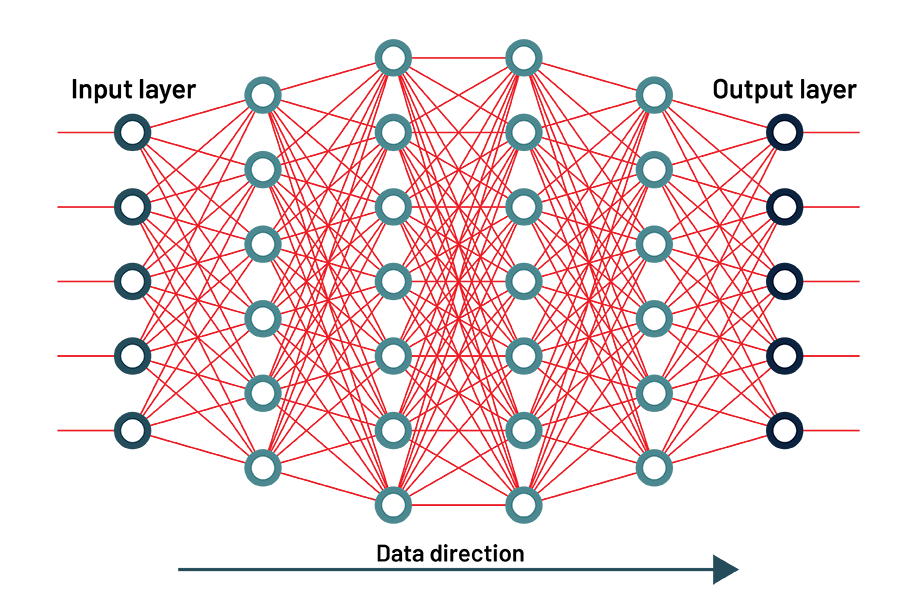

Neural networks offer a powerful method for interpreting 2D images into 3D scenes. They mimic neurons in the human brain and can give robots human-like sight. Apera AI’s engineers take a practical view of neural networks, focusing on how to make them useful for object identification and robotic guidance.

AI is sometimes called a “black box,” but the math can be fully understood. A high school graduate could perform the operations by hand if given enough time.

A neural network is a software function. If you opened the code, you would see a huge list of constants, the numerical values of the image taken by the 2D cameras, an enormous series of multiplications, additions, replacing negative values with zero, and not a lot more.

Much of Apera AI’s core technology involves neural networks. We have a patent on the ability to identify the “most pickable” object after training in a neural network. This attention mechanism follows the human tendency to focus on a single, most obvious object when there is randomness. This is what makes Apera AI’s Vue vision software a faster tool for multiple applications.

The special part of Apera AI’s neural network is how it is written. The human part of building the neural network is defining the problem and how the resulting robotic workcell should perform. Defining the problem involves these questions:

- What is the object geometry?

- What is its material?

- What are the size and characteristics of the bin or area where the objects are located?

- What is the position of the robots?

- What is the height of the cameras?

To train a neural network, we gather a large collection of images along with the desired outputs. For each one, we ask “what tiny change each to this huge list of constants would have made the current output a tiny bit closer to the desired output?” Then we make that change. Often the change is a bad change, but on average they are good changes. Gradually, we have an improving function.

The process involves an accelerated computing machine analyzing a large dataset of images of the object, subject to a high level of variation in each image, including its position and contact against other objects, dimensions, finish (e.g. shiny) and interaction with light.

Synthetic data speeds up the learning process

You may have heard about the human brute force that some machine learning models need. People have to stare at images for hours, teaching the AI by confirming that the photo is of a burrito or a cat or a giraffe. This happens because small additions to the image, like giving an animal the pattern of another animal, can trick the model.

Synthetic data is a path out of this trap. We can create images that parallel the real world, like sockets in a bin, or the assembly of a part.

The alternative to our method is for a programmer to write traditional code. They would collect several images, along with the desired outputs. The logic would be gradually built up, attempting to match actual outputs to desired outputs, until the complexity was overwhelming, and the problem declared too hard.

One advantage of training neural networks is that it’s a data-driven strategy. If one has a function to write, the main requirement is to gather a large set of images and desired outputs. If the function needs to be robust to changes in lighting, the set of images should include changes in lighting.

Additional inputs to Apera’s models can add real-world performance as we better understand what affects a robot’s ability to perform its duties. Some examples:

- Better adaptability to changes in light.

- Understanding that the 2D cameras feeding images to the Apera Vue software are installed at an incorrect angle.

- Identifying the most pickable transparent item out of a pile by seeing how the objects intersect.

What are the conclusions we reach from this information? AI allows us to build sophisticated mathematical models that create human-like vision and speed. Modern image processing technology and computing power makes it all possible. Their inner workings can be reverse engineered, reweighted and fully understood. And AI/ML will become closer and closer to human sight, speed and perception.